Hanlon’s Razor: Malice or Incompetence? Misattributions in Organizational Dynamics

- Moksha Sharma

- Nov 17, 2025

- 6 min read

A Flight Path Misread

It was 1943, deep in the Allied air campaign over the Mediterranean. The sky above southern Italy was a patchwork of clouds and smoke, a fragile ceiling over a continent still split between advancing Allied forces and retreating Axis lines. A squadron of Allied bombers climbed through the haze on a mission meant to weaken German supply routes feeding the Gustav Line - the kind of sortie that could tilt an entire campaign by inches.

As the formation tightened into attack position, one aircraft suddenly drifted several degrees off course.

On the ground, at a forward command post near Bari, alarms flared. Officers hunched over creased maps, radios crackled with half-heard commands, and tension snapped through the room. The drift wasn’t catastrophic - not yet - but even small deviations over contested airspace could spiral into friendly fire incidents, exposure to enemy batteries, or a compromised bombing run.

Whispers spread quickly. Was it a navigational lapse? A failing instrument? Or, in the darkest corners of imagination, sabotage?

In the cockpit, the pilot was fighting turbulence and a set of instruments that suddenly refused to agree with one another. The horizon wobbled; the compass needle jittered. The co-pilot suggested recalibrating, but both hesitated - any correction at the wrong moment could make things worse. And under wartime pressure, even minor uncertainties feel sinister.

Meanwhile, command was spiralling into its own narrative. Officers debated contingencies, overinterpreted radio static, and sketched out impossible theories faster than they could verify them. Assumptions hardened into suspicion. A deviation of a few degrees had ballooned into imagined treachery.

Then came the truth: painfully ordinary.

A previous hard landing had knocked the compass out of alignment, leaving the heading indicator out of calibration. The error was barely noticeable, but enough to pull the aircraft out of formation. A quick in-air adjustment corrected the drift, the plane rejoined the squadron, and the mission continued without incident.

No malice. No sabotage. No negligence. Just an imperfect machine and a very human overreaction.

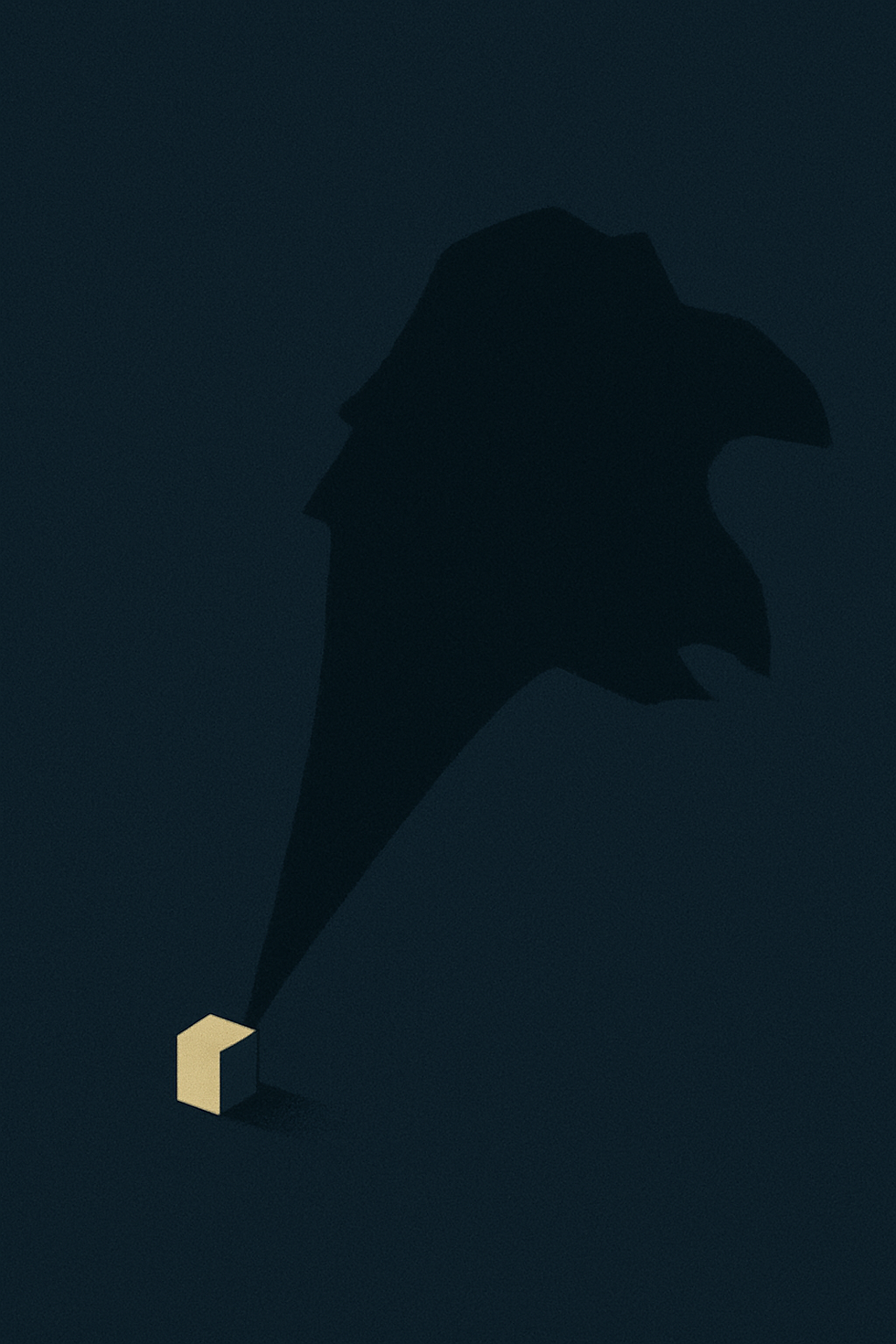

And that’s exactly where Hanlon’s Razor lives: never attribute to malice what can be adequately explained by error. The incident wasn’t remarkable because something went wrong - things go wrong in war all the time. It was remarkable because of how quickly the human mind filled in the gaps with the worst possible explanation. The drift wasn’t a conspiracy. It was a misaligned compass.

The danger wasn’t in the error - it was in the assumptions layered on top of it.

When the Mind Sees Villains Everywhere

Humans are wired to detect patterns and threats. Evolution rewarded those who assumed the worst when stakes were high. In modern organizations, this instinct manifests as misattribution: blaming a colleague for a missed deadline, suspecting a team of sabotage, or assuming a partner’s motives are hostile.

Even minor misunderstandings can spiral. A project manager who misreads a client’s instructions might suddenly be labelled negligent. A missed email becomes evidence of malice. Misreading intentions carries real costs: teams fracture, energy is wasted on conflict, and the root problem, the one that could actually be solved, remains ignored.

Hanlon’s Razor encourages leaders to pause, gather facts, and consider whether a simple mistake or system failure could explain the outcome before jumping to judgment. In doing so, they avoid letting instinctual suspicion become a self-fulfilling crisis.

Trust Erodes Faster Than Error

The consequences of misattribution are rarely trivial. A delayed report, a miscommunicated client instruction, or a technical glitch misread as deliberate harm can spiral into organizational tension. Rumours flourish, morale declines, and collaboration falters. Small errors become crises when intentions are misread.

For example, in a mid-sized investment firm, an analyst submitted a report late due to misaligned data from an external provider. Leadership initially suspected negligence or even deliberate obstruction. Emails flew, meetings were called, and energy was spent on defending positions rather than resolving the underlying issue - the data misalignment. Only after careful verification was the real cause revealed.

Whether in corporate settings, investing, or leadership, assuming malice first wastes attention and resources. Most failures aren’t plots - they’re errors dressed in circumstance. Recognizing this distinction preserves operational focus and morale.

When Errors Aren’t Evil

Not all errors are simple. Many stem from systemic complexity: ambiguous responsibilities, unclear workflows, or flawed tools. A misfiled regulatory document, a delayed deployment, or a misrouted client request may reflect these issues rather than personal negligence.

Hanlon’s Razor doesn’t excuse incompetence. Instead, it provides perspective: understanding whether a failure is structural or personal allows leaders to respond effectively, rather than punishing perceived ill intent and eroding trust.

Consider a software team that experiences repeated minor outages. Blaming a specific developer for “sabotage” would ignore process flaws, misconfigured servers, or communication gaps. Applying Hanlon’s Razor shifts focus to solving the actual problem.

A Business Misread: BNP Paribas

Not all misattribution happens in the cockpit; sometimes it plays out on the trading floor. In December 2015, BNP Paribas executed a series of trades that went largely unnoticed for several days. The cumulative mispricing amounted to around €163 million, sending ripples of concern through the bank and the wider market.

Initial reactions were predictable: negligence, incompetence, or even deliberate manipulation. Analysts and observers speculated about rogue traders or intentional misreporting. Internal tension spiked; managers worried about regulatory scrutiny and reputational damage. Panic magnified the error’s perceived gravity.

However, deeper investigation revealed a far simpler explanation. The root cause was systemic failure in trade booking and recognition, not malice. Thousands of transactions had bypassed standard oversight due to a lapse in internal controls, creating the illusion of deliberate wrongdoing. Once discovered, the bank quickly corrected the error and strengthened its procedures, but the initial assumption of ill intent had already cost time, stress, and organizational energy.

This example shows Hanlon’s Razor in action within high-stakes business contexts: the simplest explanation, operational error or process failure, is often far more likely than the dramatic assumption of malice. Leaders who pause to investigate before jumping to conclusions preserve trust, reduce wasted effort, and respond effectively to real problems.

How to Apply Hanlon’s Razor in Practice

Pause before assigning blame – Verify facts and context; avoid reacting to assumptions.

Consider simple explanations – Ask whether human error, miscommunication, or systemic issues could explain the outcome.

Focus on solutions, not villains – Address the root problem instead of hunting for someone to punish.

Communicate clearly – Make intent and responsibilities explicit to prevent misunderstandings.

Foster a culture of competence and trust – Encourage reporting of mistakes and learning rather than fear of reprisal.

Document lessons learned – Use errors to improve processes, not punish individuals.

Encourage questioning assumptions – Teach teams to ask, “Could this really be malice, or is there another explanation?”

Seeing Straight Through Chaos

Applied thoughtfully, Hanlon’s Razor sharpens judgment and protects organizational culture. Leaders who assume competence first and explore simple explanations preserve trust, focus on the real problem, and channel energy toward solutions.

Even in high-stakes modern contexts, small errors can appear catastrophic. A software deployment misstep misread as sabotage, or an unexpected market movement interpreted as negligence, can escalate tension unnecessarily. Teams that pause, verify, and investigate rather than assume ill intent maintain resilience and clarity.

A Comically Simple Fix

In the WWII incident, a drifting bomber nearly set off a chain reaction of suspicion, stress, and strategic confusion. Yet the cause turned out to be painfully ordinary - a slightly misaligned compass from a rough landing. What initially looked like sabotage or negligence dissolved into a technical hiccup the moment someone bothered to check the most basic variable.

That simplicity is almost comical in contrast to the panic it inspired. And that’s the quiet power of Hanlon’s Razor at work: most of the drama was human-made, not event-made.

The lesson translates cleanly beyond wartime flight paths. In businesses, teams, and everyday organizational dynamics, what feels like ill intent is often just miscommunication, process failure, or a garden-variety oversight. When leaders default to malice, they escalate; when they default to curiosity, they solve.

Recognizing error for what it is - early, calmly, and without theatrics - preserves trust, protects morale, and keeps operations grounded in reality. And in high-stakes environments, that clarity can be the difference between spiralling into the wrong story and fixing the right problem.

Most Mistakes Aren’t Villains

Hanlon’s Razor isn’t about excusing failure - it’s a discipline of perception: distinguishing intent from error, protecting trust, and responding to problems rationally. The principle reminds us that most “villains” aren’t plotting; they’re simply erring in plain sight.

In organizations, as in war, misjudged intentions can amplify risk unnecessarily. Leaders who see clearly, investigate patiently, and assume competence first are better equipped to solve problems and maintain resilient, cohesive teams.

In the end, the real threat is not malice; it’s our tendency to see it where it isn’t.
